Building and installing TensorFlow 1.15 + NPU + tfAdapter on aarch64

I need to use Ascend (昇腾) chips, hence this write-up.

Before we start

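Server weirdness, rant 1: pip inside a conda virtual environment still installs globally

Before any of this I ran into a strange problem: inside a conda virtual environment, pip still installed packages globally. Running pip -V always pointed at the globally installed pip. Here is how I got past it.

I found similar cases on CSDN and Stack Overflow, but neither fully solved my problem.

The sys.path listing at this point showed the site-packages under $HOME/.local sitting ahead of the miniconda virtual environment, with userbase and usersite both pointing there. Some genius had installed pip under the home directory (many people share this account), so the pip under $HOME/.local always won. I followed the first article and changed the settings, which fixed the problem for the time being.

However, that only worked because my .bashrc bypassed the environment variables for the global Python under /usr/bin (they are set in /etc/profile). The idea was to create a few new variables holding the values of PATH, LD_LIBRARY_PATH and PYTHONPATH from before the modification, and then reference those variables in .bashrc, so that the Python settings from /etc/profile would not take effect for my user. For some inexplicable reason this stopped other people from connecting to the server over ssh. Luckily I was still connected, so I quickly reverted it. Checking again, the pip under /usr/bin had now shown up in sys.path, very near the front.

So I took drastic measures and set the environment variable tied to the site-packages path, $PYTHON, to empty. Only then did the errors stop.

But when I created a new conda environment, the site-packages under $HOME/.local popped up again.

This time I got smarter and edited miniconda/envs/{environment name}/lib/python3.7/site.py directly, setting

ENABLE_USER_SITE = False

After saving I checked again, and it worked (the user site still exists, we simply no longer use it, which is perfectly reasonable).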
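To check whether this symptom is present, one can compare where pip resolves against the prefix of the active environment. The following is only a minimal sketch, not the procedure used in this post: the helper name is_inside_prefix is made up for illustration, and it assumes that conda activate has set CONDA_PREFIX. PYTHONNOUSERSITE is a standard CPython environment variable that keeps the user site-packages directory out of sys.path, which is a less invasive alternative to editing site.py.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when path $2 lives under directory prefix $1.
is_inside_prefix() {
    local prefix="${1%/}"
    local path="$2"
    case "$path" in
        "$prefix"/*) return 0 ;;
        *) return 1 ;;
    esac
}

# Only run the check when a conda environment is active and pip is on PATH.
if [ -n "${CONDA_PREFIX:-}" ] && command -v pip >/dev/null 2>&1; then
    pip_path="$(command -v pip)"
    if is_inside_prefix "$CONDA_PREFIX" "$pip_path"; then
        echo "pip comes from the active environment: $pip_path"
    else
        echo "WARNING: pip resolves outside the environment: $pip_path" >&2
    fi
fi

# Ask Python to ignore ~/.local site-packages for this shell session.
export PYTHONNOUSERSITE=1
```

Putting the export line into ~/.bashrc would apply it to every new shell of that user, including newly created conda environments.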

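Server weirdness, rant 2: bash: /{}/python: bad interpreter: invalid argument

Then the guy next to me hit a new problem: after pip uninstall removed a pile of packages, the python in the virtual environment broke (bad interpreter), and so did our morale. A similar problem is described here:

Some people tried dmesg to look for kernel errors, but we did not have permission. Going into the bin directory and running ./python3.x by hand gave the same bad interpreter: invalid argument error.

So we tried something odd: copy ./python3.x under another name, for example ./python3.x-2. Running ./python3.x-2 then opened the interactive prompt normally.

Next we renamed ./python3.x-2 back to ./python3.x and, surprise, the error was gone (ridiculous!). It is only a stopgap, but we really did not want to dig any further.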
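The copy-and-rename workaround above can be sketched as a small helper. This is an illustration only: the function name recreate_file is made up, the "-2" suffix mirrors the name used above, and the post does not establish why rewriting the file makes the error go away.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a file to "<name>-2" (keeping mode and timestamps),
# then rename the copy over the original, so the path points at a freshly
# written file.
recreate_file() {
    target="$1"
    tmp="${target}-2"
    cp -p "$target" "$tmp" && mv -f "$tmp" "$target"
}

# Example, commented out because python3.x is a placeholder name:
# cd "$CONDA_PREFIX/bin" && recreate_file ./python3.x
```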

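Main text

Reference articles:

On the EulerOS aarch64 system, make sure every dependency package and the environment are installed. In particular, get the numpy version right, or you will hit errors later!

Next, follow the two documents below to build and install TF.

First, TensorFlow depends on h5py, and h5py depends on HDF5, so HDF5 has to be built and installed first; otherwise installing h5py with pip fails. Perform the following steps as root.

TensorFlow also depends on grpcio, so it is advisable to install a pinned version of grpcio before installing TensorFlow; otherwise the installation may fail.

Building and installing HDF5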

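Download the HDF5 source package with wget; it can go in any directory on the install machine:

wget https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.10/hdf5-1.10.5/src/hdf5-1.10.5.tar.gz --no-check-certificate

Go to the download directory and extract the source package:

tar -zxvf hdf5-1.10.5.tar.gz

Enter the extracted folder, then configure, build, and install:

cd hdf5-1.10.5/

./configure --prefix=/usr/include/hdf5

make install

Configure the environment variable and create symlinks for the shared libraries:

Set the environment variable (in your own ~/.bashrc or in the global /etc/profile; the former is recommended)

export CPATH="/usr/include/hdf5/include/:/usr/include/hdf5/lib/"

Create the shared-library symlinks (requires root)

ln -s /usr/include/hdf5/lib/libhdf5.so /usr/lib/libhdf5.so

ln -s /usr/include/hdf5/lib/libhdf5_hl.so /usr/lib/libhdf5_hl.so

Install Cython

pip install Cython

Install h5py

pip install h5py==2.8.0

Install grpcio

pip install grpcio==1.32.0

Make sure the pip you are using is the one installed in the current environment!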

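Install the pip package dependencies

pip install -U --user pip numpy wheel

pip install -U --user keras_preprocessing --no-deps

Install Bazel

The recommendation was to install Bazelisk, touted as great because it installs Bazel for you automatically. Its download then failed with a certificate error, so I took the version it suggested and fetched a prebuilt bazel binary from GitHub (I first downloaded 5.2.0; what is actually needed is 0.26.1 or older).

5.2.0

Switch to root:

chmod +x bazel-5.2.0-linux-arm64

mv bazel-5.2.0-linux-arm64 /usr/local/bin/bazel

Bazel is now installed; run bazel version to confirm.

Then came the fun part. I went on to run configure as the TF site describes (covered later), and it reported an error:

Unbelievable: I had finished installing, and now it told me the version was too new and made me roll back.

0.26.1

So I dutifully installed the old version, following the official docs and building bazel-0.26.1-dist.zip by hand (the old release has no ready-to-run binary!).

It turned out a JDK 8 environment was needed too, which stunned me, so I downloaded one from the official site.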

Put the downloaded archive in your own directory (I put it under my $HOME), then

tar -zxvf jdk-8u333-linux-aarch64.tar.gz

vim ~/.bashrc

and add the bin directory to the environment variables:

JAVA_HOME=/home/jdk1.8.0_333

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

java -version reports that everything is fine, so on with building Bazel.
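Before starting the long Bazel bootstrap, it may be worth confirming that JAVA_HOME really points at a full JDK (compiling Bazel needs javac, not just java). A minimal sketch, with check_java_home as a made-up helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when $1 looks like a JDK root directory,
# i.e. it contains executable bin/java and bin/javac.
check_java_home() {
    [ -n "$1" ] && [ -x "$1/bin/java" ] && [ -x "$1/bin/javac" ]
}

if check_java_home "${JAVA_HOME:-}"; then
    # Print the version of the JDK that JAVA_HOME points at.
    "$JAVA_HOME/bin/java" -version
else
    echo "JAVA_HOME is unset or does not point at a JDK: '${JAVA_HOME:-}'" >&2
fi
```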

mkdir bazel_0.26.1

unzip -d bazel_0.26.1/ bazel-0.26.1-dist.zip

cd bazel_0.26.1

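ls

Confirm that the extracted directory structure looks like this:

Then enter the command below and enjoy the flashy build animation (hard to install, but it does look cool):

env EXTRA_BAZEL_ARGS="--host_javabase=@local_jdk//:jdk" bash ./compile.sh

Seeing this means it succeeded: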

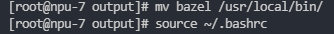

Later I hit a small problem: the bazel in my user directory did not take effect, so I moved the compiled binary (in output) to:

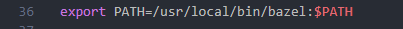

Then set the environment variable

and that is all.

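Other packages and environment

See this article

and this one

Download and install the driver: Ascend-cann-nnae_{version}_linux-{arch}.run

and the plugin package: Ascend-cann-tfplugin_{version}_linux-{arch}.run

These let TF call the NPU. Note that the driver must be installed to a specified directory under our home directory, not under /usr/local (that one is the global driver, and its version is not the latest).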

下载tf1.15.0源码

先下载tf源码,git的话

git clone https://github.com/tensorflow/tensorflow.git

cd tensorflow然后check到需要的分支即可( branch_name改为需要的版本,这里我们需要r1.15,即昇腾支持的版本 )

git checkout branch_name 我是图省事直接在这里download了一个源码 https:// github.com/tensorflow/t ensorflow/tree/r1.15 ,因为在服务器git报一堆证书的错,不想搞哈哈哈(咳咳,一定要确认版本对不对,我下了个1.15.5的,他压缩包名字叫1.15,就很baby,版本下错了tfadapter不能用的)

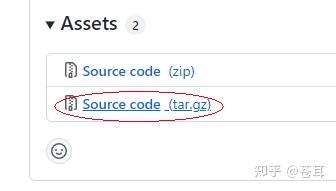

后面发现如果要下到精准的1.15.0,需要在这里

然后找到对应需要的版本

或者修改这个链接后面的数字:

https://github.com/tensorflow/tensorflow/releases/tag/v1.15.0然后进去下载源码传到服务器

或者直接

wget https://github.com/tensorflow/tensorflow/archive/refs/tags/v1.15.0.tar.gz --no-check-certificate修改tf1.15.0源码 1
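Since a wrong source version makes tfadapter unusable, it can help to confirm the version of the extracted tree before editing anything. The sketch below assumes the release string is stored as _VERSION = '...' in tensorflow/tools/pip_package/setup.py, which is how the 1.15 tree appears to record it; the helper name tf_source_version is made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the version string recorded in a TF source tree.
# Assumption: setup.py contains a line like  _VERSION = '1.15.0'
tf_source_version() {
    sed -n "s/^_VERSION *= *['\"]\([^'\"]*\)['\"].*/\1/p" \
        "$1/tensorflow/tools/pip_package/setup.py" | head -n 1
}

# Example, commented out because the directory name is a placeholder:
# tf_source_version ./tensorflow-1.15.0
```

If the printed value is not 1.15.0, the archive is the wrong one regardless of its file name.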

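Before running the build, the source needs a few changes:

First download the "nsync-1.22.0.tar.gz" source package.

Go into the TensorFlow 1.15.0 source directory, open "tensorflow/workspace.bzl", and find the "tf_http_archive" definition whose name is nsync:

tf_http_archive(
    name = "nsync",
    sha256 = "caf32e6b3d478b78cff6c2ba009c3400f8251f646804bcb65465666a9cea93c4",
    strip_prefix = "nsync-1.22.0",
    system_build_file = clean_dep("//third_party/systemlibs:nsync.BUILD"),
    urls = [
        "https://storage.googleapis.com/mirror.tensorflow.org/github.com/google/nsync/archive/1.22.0.tar.gz",
        "https://github.com/google/nsync/archive/1.22.0.tar.gz",
    ],
)

Use either link to download nsync-1.22.0.tar.gz, extract it with tar -zxvf followed by the archive name, and enter the nsync-1.22.0 directory.

Note: the tutorial mentions that a pax_global_header file is also extracted. I looked it up: it only appears when the tar version is too old, so just ignore it.

Edit "nsync-1.22.0/platform/c++11/atomic.h" and add the content marked by the red box after NSYNC_CPP_START_.

After the change, lines 63-93 should look like this: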

#include "nsync_cpp.h"
#include "nsync_atomic.h"

NSYNC_CPP_START_

#define ATM_CB_() __sync_synchronize()

static INLINE int atm_cas_nomb_u32_ (nsync_atomic_uint32_ *p, uint32_t o, uint32_t n) {
    int result = (std::atomic_compare_exchange_strong_explicit (NSYNC_ATOMIC_UINT32_PTR_ (p), &o, n, std::memory_order_relaxed, std::memory_order_relaxed));
    ATM_CB_();
    return result;
}

static INLINE int atm_cas_acq_u32_ (nsync_atomic_uint32_ *p, uint32_t o, uint32_t n) {
    int result = (std::atomic_compare_exchange_strong_explicit (NSYNC_ATOMIC_UINT32_PTR_ (p), &o, n, std::memory_order_acquire, std::memory_order_relaxed));
    ATM_CB_();
    return result;
}

static INLINE int atm_cas_rel_u32_ (nsync_atomic_uint32_ *p, uint32_t o, uint32_t n) {
    int result = (std::atomic_compare_exchange_strong_explicit (NSYNC_ATOMIC_UINT32_PTR_ (p), &o, n, std::memory_order_release, std::memory_order_relaxed));
    ATM_CB_();
    return result;
}

static INLINE int atm_cas_relacq_u32_ (nsync_atomic_uint32_ *p, uint32_t o, uint32_t n) {
    int result = (std::atomic_compare_exchange_strong_explicit (NSYNC_ATOMIC_UINT32_PTR_ (p), &o, n, std::memory_order_acq_rel, std::memory_order_relaxed));
    ATM_CB_();
    return result;
}

Then repack the directory into the "nsync-1.22.0.tar.gz" source package with tar -zcvf ./nsync-1.22.0.tar.gz nsync-1.22.0

Regenerate the sha256sum checksum of the "nsync-1.22.0.tar.gz" source package:

sha256sum nsync-1.22.0.tar.gz

The command prints the sha256sum checksum (a string of digits and letters).

Go back to the TF source, open "tensorflow/workspace.bzl", and find the "tf_http_archive" definition whose name is nsync. Put the checksum you just obtained after "sha256=", and in the first line of the list after "urls=" put the file:// URL of the place where "nsync-1.22.0.tar.gz" is stored.

Suppose it is saved at "/tmp/nsync-1.22.0.tar.gz".

The URL is then file:///tmp/nsync-1.22.0.tar.gz. Be careful not to drop that third "/"!

End of source modification 1.
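The three slashes in the file:// URL above come from joining the "file://" scheme prefix with an absolute path, which itself starts with "/". A tiny sketch that builds the URL mechanically (to_file_url is a made-up helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn an absolute path into a file:// URL.
# "file://" plus a path starting with "/" yields the three slashes.
to_file_url() {
    case "$1" in
        /*) printf 'file://%s\n' "$1" ;;
        *)  echo "to_file_url: need an absolute path, got: $1" >&2
            return 1 ;;
    esac
}

to_file_url /tmp/nsync-1.22.0.tar.gz   # prints file:///tmp/nsync-1.22.0.tar.gz
```

Rejecting relative paths is a deliberate choice here: a relative path would silently produce a two-slash URL, which is exactly the mistake warned about above.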

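Running the build

Configure the system build by running ./configure at the root of the TensorFlow source tree. That is, enter the source root and run

./configure

If you are using a virtual environment, python configure.py gives priority to paths inside the environment, whereas ./configure gives priority to paths outside it. In both cases you can change the defaults, so it is not a big deal.

Along the way, check that the detected paths are correct. For the questions about optional features, always pick the capitalized (default) choice: we will install Ascend's own tfAdapter later, so none of these extras are needed. My session: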

(ljx2) [dev211@npu-7 tensorflow-r1.15]$ python configure.py

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.26.1- (@non-git) installed.

Please specify the location of python. [Default is /home/dev211/miniconda/envs/ljx2/bin/python]:

Found possible Python library paths:

/home/dev211/miniconda/envs/ljx2/lib/python3.7/site-packages

Please input the desired Python library path to use. Default is [/home/dev211/miniconda/envs/ljx2/lib/python3.7/site-packages]

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: Y

XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: N

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: N

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: N

No CUDA support will be enabled for TensorFlow.

Do you wish to download a fresh release of clang? (Experimental) [y/N]: N

Clang will not be downloaded.

Do you wish to build TensorFlow with MPI support? [y/N]: N

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See .bazelrc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

--config=numa # Build with NUMA support.

--config=dynamic_kernels # (Experimental) Build kernels into separate shared objects.

--config=v2 # Build TensorFlow 2.x instead of 1.x.

Preconfigured Bazel build configs to DISABLE default on features:

--config=noaws # Disable AWS S3 filesystem support.

--config=nogcp # Disable GCP support.

--config=nohdfs # Disable HDFS support.

--config=noignite # Disable Apache Ignite support.

--config=nokafka # Disable Apache Kafka support.

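--config=nonccl # Disable NVIDIA NCCL support.

Modifying the TF 1.15.0 source, part 2

After running ./configure, edit the .tf_configure.bazelrc configuration file (plain ls does not show it because the name starts with ".", but it is there; ls -a lists it) and add the following build option line:

build:opt --cxxopt=-D_GLIBCXX_USE_CXX11_ABI=0

and delete the following two lines:

build:opt --copt=-march=native

build:opt --host_copt=-march=native

That ends source modification 2. Carry on with the official build instructions.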
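The same edit to .tf_configure.bazelrc can also be scripted, which is handy because ./configure regenerates the file each time it runs. A minimal sketch, with patch_tf_bazelrc as a made-up helper name; it only touches the three lines named above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the two -march=native lines from the given
# bazelrc file and make sure the CXX11 ABI flag is present exactly once.
patch_tf_bazelrc() {
    rc="$1"
    tmp="${rc}.tmp"
    flag='build:opt --cxxopt=-D_GLIBCXX_USE_CXX11_ABI=0'
    [ -f "$rc" ] || { echo "no such file: $rc" >&2; return 1; }
    # Keep every line except the two -march=native options and any earlier
    # copy of the flag. grep exits non-zero when nothing is left over,
    # which is not an error here.
    grep -v -x -F \
        -e 'build:opt --copt=-march=native' \
        -e 'build:opt --host_copt=-march=native' \
        -e "$flag" "$rc" > "$tmp" || true
    printf '%s\n' "$flag" >> "$tmp"
    mv -f "$tmp" "$rc"
}

# Example, commented out; run it from the TF source root after ./configure:
# patch_tf_bazelrc .tf_configure.bazelrc
```

Because the flag is filtered out before being appended again, running the helper twice leaves the file unchanged.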

Build the pip package

From the root of the TF source tree, run

bazel build --config=v1 --local_ram_resources=2048 //tensorflow/tools/pip_package:build_pip_package

The --local_ram_resources=2048 setting keeps the build from running out of memory (2 GB is enough). If the 1.15.0 build reports ERROR: Config value v1 is not defined in any .rc file, remove --config=v1. The configure output shown earlier lists --config=v2 # Build TensorFlow 2.x instead of 1.x. and nothing about v1, so I simply deleted it; oddly, 1.15.5 accepts --config=v1 without complaint.

Then it died with a pile of errors: the GitHub certificate problem again.

ERROR: An error occurred during the fetch of repository 'bazel_skylib': java.io.IOException: Error downloading [https://github.com/bazelbuild/bazel-skylib/releases/download/0.9.0/bazel_skylib-0.9.0.tar.gz] to /root/.cache/bazel/_bazel_root/4884d566396e9b67b62185751879ad14/external/bazel_skylib/bazel_skylib-0.9.0.tar.gz: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

Numb by now, I went looking for a fix, following:

Download the package locally with wget your_package_address --no-check-certificate, then edit the WORKSPACE file. For example:

wget https://github.com/bazelbuild/bazel-skylib/releases/download/0.8.0/bazel-skylib.0.8.0.tar.gz --no-check-certificate

Then edit the WORKSPACE of the source tree and change the url to something like the following (note: after file://, do not drop the leading "/" of your own path)

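http_archive(
    name = "bazel_skylib",
    sha256 = "2ea8a5ed2b448baf4a6855d3ce049c4c452a6470b1efd1504fdb7c1c134d220a",
    strip_prefix = "bazel-skylib-0.8.0",
    urls = ["file://{your path}/bazel-skylib.0.8.0.tar.gz"],
)

If you still get "ERROR: An error occurred during the fetch of repository 'bazel_skylib':", go on to the next step and keep editing the files that reference bazel_skylib.

cd ~

grep -r bazel-skylib.0.8.0 .cache/

This shows which files under .cache reference the bazel-skylib.0.8.0.tar.gz url. Based on the output, go to the directories of the files that reference it, for example these:

Open them and search for bazel-skylib.

Change the url at the corresponding position (note: after file://, do not drop the leading "/" of your own path)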

http_archive(
    name = "bazel_skylib",
    sha256 = "2ea8a5ed2b448baf4a6855d3ce049c4c452a6470b1efd1504fdb7c1c134d220a",
    strip_prefix = "bazel-skylib-0.8.0",
    urls = ["file://{your path}/bazel-skylib.0.8.0.tar.gz"],
)

Then a new error of the same kind appeared. There seemed to be a lot of packages, so I downloaded them all in one go (if the server is slow, download on a PC and upload; I actually recommend that):

wget https://github.com/bazelbuild/rules_docker/releases/download/v0.14.3/rules_docker-v0.14.3.tar.gz --no-check-certificate

wget https://github.com/bazelbuild/rules_swift/releases/download/0.11.1/rules_swift.0.11.1.tar.gz --no-check-certificate

wget https://github.com/llvm-mirror/llvm/archive/7a7e03f906aada0cf4b749b51213fe5784eeff84.tar.gz --no-check-certificate

Then, as before, switch to the TF source directory, open WORKSPACE (for example with vi WORKSPACE), and point rules_docker and rules_swift at the corresponding local paths.

Search for rules_docker and add the following after line 35 (note: after file://, do not drop the leading "/" of your own path)

http_archive(
    name = "io_bazel_rules_docker",
    sha256 = "6287241e033d247e9da5ff705dd6ef526bac39ae82f3d17de1b69f8cb313f9cd",
    strip_prefix = "rules_docker-0.14.3",
    urls = ["file://{your path}/rules_docker-v0.14.3.tar.gz"],
)

Then search for rules_swift and change its urls (note: after file://, do not drop the leading "/" of your own path)

http_archive(
    name = "build_bazel_rules_swift",
    sha256 = "96a86afcbdab215f8363e65a10cf023b752e90b23abf02272c4fc668fcb70311",
    urls = [
        "file://{your path}/rules_swift.0.11.1.tar.gz",
        # "https://github.com/bazelbuild/rules_swift/releases/download/0.11.1/rules_swift.0.11.1.tar.gz"
    ],
)  # https://github.com/bazelbuild/rules_swift/releases

llvm is a special case: open workspace.bzl in the tensorflow directory under the source root and change the link at the corresponding position (note: after file://, do not drop the leading "/" of your own path)

tf_http_archive(
    name = "llvm",
    build_file = clean_dep("//third_party/llvm:llvm.autogenerated.BUILD"),
    sha256 = "599b89411df88b9e2be40b019e7ab0f7c9c10dd5ab1c948cd22e678cc8f8f352",
    strip_prefix = "llvm-7a7e03f906aada0cf4b749b51213fe5784eeff84",
    urls = [
        "file://{your path}/llvm-7a7e03f906aada0cf4b749b51213fe5784eeff84.tar.gz",
        "https://mirror.bazel.build/github.com/llvm-mirror/llvm/archive/7a7e03f906aada0cf4b749b51213fe5784eeff84.tar.gz",
        "https://github.com/llvm-mirror/llvm/archive/7a7e03f906aada0cf4b749b51213fe5784eeff84.tar.gz",
    ],
)

Then go back to the TF source root and run

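bazel build --config=v1 --local_ram_resources=2048 //tensorflow/tools/pip_package:build_pip_package

Yet another package refused to download! Huawei Cloud! I ****

OK, I blamed the wrong party: I tried wget and the mirror link returns 404.

Another package, pcre, fails in the same way.

So (note: mind the version; 1.15.0 requires pcre 8.42, while 1.15.5 requires 8.44)

wget https://github.com/pybind/pybind11/archive/v2.3.0.tar.gz --no-check-certificate

wget https://ftp.exim.org/pub/pcre/pcre-8.44.tar.gz --no-check-certificate

Then change the links at the corresponding positions in workspace.bzl under the tensorflow directory (remember to verify the sha256 checksums).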
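Because these archives are downloaded with --no-check-certificate, comparing each file against the sha256 value already recorded in WORKSPACE or workspace.bzl is the only integrity check left. A minimal sketch (verify_sha256 is a made-up helper name; the expected value is whatever the build file lists for that archive):

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the sha256 of a file with an expected hex digest.
verify_sha256() {
    file="$1"
    expected="$2"
    actual="$(sha256sum "$file" | awk '{print $1}')"
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        echo "  expected $expected" >&2
        echo "  actual   $actual" >&2
        return 1
    fi
}

# Example, commented out; the digest is the llvm value quoted earlier in this post:
# verify_sha256 llvm-7a7e03f906aada0cf4b749b51213fe5784eeff84.tar.gz \
#     599b89411df88b9e2be40b019e7ab0f7c9c10dd5ab1c948cd22e678cc8f8f352
```

A mismatch on an unmodified upstream archive usually means a truncated or wrong download, so it is better to fetch the file again than to paste the new digest into the build file.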

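Now run (tip: first confirm the numpy version is below 1.19; the reason is the error shown further down)

pip install 'numpy<1.19.0'

bazel build --config=v1 --local_ram_resources=2048 //tensorflow/tools/pip_package:build_pip_package

Nothing happened; the world was at peace.

Note: the build takes a long time. I do not know whether raising --local_ram_resources=2048 would help.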
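Given how long the build runs before it fails, the numpy constraint mentioned in the tip is worth checking up front. The sketch below is an illustration under two assumptions: python on PATH is the interpreter that configure picked up, and GNU sort with the -V (version sort) option is available. The helper name version_lt is made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when version $1 is strictly lower than $2.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Ask the current python for its numpy version, if numpy is importable.
if np_version="$(python -c 'import numpy; print(numpy.__version__)' 2>/dev/null)"; then
    if version_lt "$np_version" 1.19.0; then
        echo "numpy $np_version is below 1.19.0"
    else
        echo "numpy $np_version is 1.19.0 or newer" >&2
    fi
else
    echo "numpy is not importable by the current python" >&2
fi
```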

Two hours later, it suddenly failed:

ERROR: /home/dev211/ljx/make_tf/tensorflow-r1.15/tensorflow/python/BUILD:329:1: C++ compilation of rule '//tensorflow/python:bfloat16_lib' failed (Exit 1)

tensorflow/python/lib/core/bfloat16.cc: In function 'bool tensorflow::{anonymous}::Initialize()':

tensorflow/python/lib/core/bfloat16.cc:634:36: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [6], <unresolved overloaded function type>, const std::array<int, 3>&)'

compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

tensorflow/python/lib/core/bfloat16.cc:638:36: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [10], <unresolved overloaded function type>, const std::array<int, 3>&)'

compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

tensorflow/python/lib/core/bfloat16.cc:641:77: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [5], <unresolved overloaded function type>, const std::array<int, 3>&)'

if (!register_ufunc("less", CompareUFunc<Bfloat16LtFunctor>, compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

tensorflow/python/lib/core/bfloat16.cc:645:36: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [8], <unresolved overloaded function type>, const std::array<int, 3>&)'

compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

tensorflow/python/lib/core/bfloat16.cc:649:36: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [11], <unresolved overloaded function type>, const std::array<int, 3>&)'

compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

tensorflow/python/lib/core/bfloat16.cc:653:36: error: no match for call to '(tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>) (const char [14], <unresolved overloaded function type>, const std::array<int, 3>&)'

compare_types)) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: candidate: tensorflow::{anonymous}::Initialize()::<lambda(const char*, PyUFuncGenericFunction, const std::array<int, 3>&)>

const std::array<int, 3>& types) {

tensorflow/python/lib/core/bfloat16.cc:608:60: note: no known conversion for argument 2 from '<unresolved overloaded function type>' to 'PyUFuncGenericFunction {aka void (*)(char**, const long int*, const long int*, void*)}'

Target //tensorflow/tools/pip_package:build_pip_package failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 10093.129s, Critical Path: 5397.43s

INFO: 18440 processes: 18440 local.

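FAILED: Build did NOT complete successfully

According to several links, numpy 1.19 or newer causes this problem.

A comment on https://github.com/tensorflow/tensorflow/issues/41584 mentions the following two workarounds:

https://github.com/tensorflow/tensorflow/issues/41086#issuecomment-656833081 (commenters say it does not work)

https://github.com/tensorflow/tensorflow/issues/41061#issuecomment-662222308 (reported to work in the end)

So you must run (for some reason, my heart is perfectly calm by now):

pip install 'numpy<1.19.0'

Note: uninstalling the newer numpy is what triggered the error from "Server weirdness, rant 2: bash: /{}/python: bad interpreter: invalid argument" above. After another round of copying and renaming, pip and python were back to normal.

Then continue the build.

And I was about to lose it again: the same error. It worked for everyone else, just not for me. So I dutifully fell back on the approach the comments said does not work: https://github.com/tensorflow/tensorflow/issues/41086#issuecomment-656833081

Edit tensorflow/python/lib/core/bfloat16.cc under the TF source root and add const to the parameters of two functions.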

Around line # 508 is the function CompareUFunc. Add "const" to the parameters, like this:

template <typename Functor>
void CompareUFunc(char** args, npy_intp const* dimensions, npy_intp const* steps,
                  void* data) {
  BinaryUFunc<bfloat16, npy_bool, Functor>(args, dimensions, steps, data);
}

Line # 493 is the function BinaryUFunc. Add "const" here too:

template <typename InType, typename OutType, typename Functor>
void BinaryUFunc(char** args, npy_intp const* dimensions, npy_intp const* steps,
                 void* data) {

In addition, numpy has to be restored to the latest version, otherwise you get this error:

F tensorflow/python/lib/core/bfloat16.cc:675] Check failed: PyBfloat16_Type.tp_base != nullptr

Then build again, and it finishes successfully.

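Sigh.

Nothing is wrong; I am just having a sip of water before writing on.

Generate the whl file

The path in the screenshot above is under the TF source root, so once more run from the root:

./bazel-bin/tensorflow/tools/pip_package/build_pip_package {the directory where you want the whl file}

This produces the whl file.

Install the TF whl file with pip

This part is routine:

pip install tensorflow-1.15.5-cp37-cp37m-linux_aarch64.whl

While doing so, pip again uninstalls and reinstalls some of the existing packages, which leads to this error: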

(ljx2) [dev211@npu-7 tf_whl]$ python

Python 3.7.12 | packaged by conda-forge | (default, Oct 26 2021, 06:22:24)

[GCC 9.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

RuntimeError: module compiled against API version 0xe but this version of numpy is 0xd

ImportError: numpy.core.multiarray failed to import