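In the CUDA Driver API, the kernel-launch function `cuLaunchKernel` has the following prototype:

```cuda
CUresult CUDAAPI cuLaunchKernel(CUfunction f,
                                unsigned int gridDimX,
                                unsigned int gridDimY,
                                unsigned int gridDimZ,
                                unsigned int blockDimX,
                                unsigned int blockDimY,
                                unsigned int blockDimZ,
                                unsigned int sharedMemBytes,
                                CUstream hStream,
                                void **kernelParams,
                                void **extra);
```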

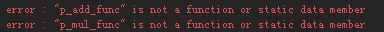
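The prototype does not show how arguments reach the kernel. Below is a minimal sketch of a call. `kernelParams` is an array with one pointer per kernel argument, in declaration order. The sketch makes several assumptions:

- A CUDA 11 or later toolkit, for the runtime function `cudaGetFuncBySymbol`. It is used here to get the `CUfunction` handle of a kernel compiled in the same file, instead of loading a module.
- A single GPU.
- Linking with `-lcuda`.

The kernel `scale` and the helper `scale_with_driver_api` are hypothetical names chosen for illustration.

```cuda
// Sketch: launching a kernel through the Driver API (cuLaunchKernel).
// Assumptions: CUDA 11+ (cudaGetFuncBySymbol), one GPU, link with -lcuda.
#include <cuda.h>          // Driver API: cuInit, cuLaunchKernel, CUfunction
#include <cuda_runtime.h>  // Runtime API: cudaMalloc, cudaMemcpy, cudaGetFuncBySymbol
#include <cassert>
#include <vector>

// Hypothetical example kernel: multiplies each element by factor.
__global__ void scale(float *data, float factor, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Hypothetical helper: scales v on the GPU via cuLaunchKernel; returns true on success.
bool scale_with_driver_api(std::vector<float> &v, float factor)
{
    if (v.empty() || cuInit(0) != CUDA_SUCCESS) return false;
    int n = static_cast<int>(v.size());
    size_t bytes = n * sizeof(float);

    float *d_data = nullptr;  // the first runtime call also makes the primary context current
    if (cudaMalloc(&d_data, bytes) != cudaSuccess) return false;
    cudaMemcpy(d_data, v.data(), bytes, cudaMemcpyHostToDevice);

    // cudaFunction_t and CUfunction are the same handle type (struct CUfunc_st *).
    cudaFunction_t fn = nullptr;
    bool ok = cudaGetFuncBySymbol(&fn, reinterpret_cast<const void *>(scale)) == cudaSuccess;

    if (ok) {
        void *kernelParams[] = { &d_data, &factor, &n };  // one pointer per kernel argument
        unsigned int block = 256;
        unsigned int grid = (n + block - 1) / block;
        ok = cuLaunchKernel(fn,
                            grid, 1, 1,    // gridDimX, gridDimY, gridDimZ
                            block, 1, 1,   // blockDimX, blockDimY, blockDimZ
                            0,             // sharedMemBytes: no dynamic shared memory
                            nullptr,       // hStream: default (NULL) stream
                            kernelParams,  // kernel arguments
                            nullptr)       // extra: unused
             == CUDA_SUCCESS;
        ok = ok && cudaDeviceSynchronize() == cudaSuccess;
    }
    cudaMemcpy(v.data(), d_data, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_data);
    return ok;
}
```

With `extra` set to `nullptr`, all arguments are passed through `kernelParams`. The driver copies each argument's value at launch time, so the local array only needs to live until `cuLaunchKernel` returns.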

A `__global__` function can call `__device__` functions. When there is a special need, a `__device__` function can even be passed as an argument to a `__global__` function.

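In CUDA, however, you cannot simply pass a function pointer the way you would in plain C++. Host code cannot take the address of a `__device__` function directly, so the pointer has to be wrapped, that is, obtained on the device side, before it is passed in.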

```cuda
typedef double (*funcFormat)(int, char);
```

Here `double` is the return type and `int, char` is the parameter list. Any function with this signature can be referred to through this type.
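A common way to do this wrapping is sketched below. The sketch assumes the usual idiom:

1. Store each `__device__` function's address in a `__device__` variable of type `funcFormat`.
2. Copy that value to the host with `cudaMemcpyFromSymbol`.
3. Pass it to the kernel as an ordinary argument.

The names `addCode`, `mulCode`, `d_add`, `d_mul`, `applyFunc` and `runApply` are hypothetical.

```cuda
// Sketch: passing a __device__ function into a __global__ function via a function pointer.
#include <cuda_runtime.h>
#include <cassert>
#include <vector>

typedef double (*funcFormat)(int, char);

// Two hypothetical device functions that match funcFormat
__device__ double addCode(int a, char c) { return a + c; }                       // a + character code
__device__ double mulCode(int a, char c) { return static_cast<double>(a) * c; }  // a * character code

// The "wrapping": __device__ variables initialized with the device-side addresses
__device__ funcFormat d_add = addCode;
__device__ funcFormat d_mul = mulCode;

// The kernel receives the device function as an ordinary argument and calls it indirectly
__global__ void applyFunc(funcFormat f, const int *a, const char *c, double *out, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = f(a[i], c[i]);
}

// Hypothetical host helper: applies mulCode if useMul is true, otherwise addCode
std::vector<double> runApply(const std::vector<int> &a, const std::vector<char> &c, bool useMul)
{
    int n = static_cast<int>(a.size());
    funcFormat h_f = nullptr;  // holds a DEVICE address; only meaningful when passed to a kernel
    if (useMul) cudaMemcpyFromSymbol(&h_f, d_mul, sizeof(funcFormat));
    else        cudaMemcpyFromSymbol(&h_f, d_add, sizeof(funcFormat));

    int *d_a = nullptr; char *d_c = nullptr; double *d_out = nullptr;
    cudaMalloc(&d_a, n * sizeof(int));
    cudaMalloc(&d_c, n * sizeof(char));
    cudaMalloc(&d_out, n * sizeof(double));
    cudaMemcpy(d_a, a.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(d_c, c.data(), n * sizeof(char), cudaMemcpyHostToDevice);

    applyFunc<<<(n + 127) / 128, 128>>>(h_f, d_a, d_c, d_out, n);

    std::vector<double> out(n);
    cudaMemcpy(out.data(), d_out, n * sizeof(double), cudaMemcpyDeviceToHost);  // waits for the kernel
    cudaFree(d_a);
    cudaFree(d_c);
    cudaFree(d_out);
    return out;
}
```

For example, `runApply({1, 2, 3}, {'A', 'B', 'C'}, false)` returns `{66, 68, 70}`, since `'A'` is 65. The same kernel then computes products when the `mulCode` pointer is passed instead.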

Strictly speaking, `funcFormat` above is a function-pointer type rather than a function type. Here we can …

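Perspective transformation is a common image-processing technique that simulates how a camera projects a scene onto the image plane. Implementing it in CUDA means writing a kernel that processes every pixel of the image in parallel.

Here is a simple CUDA perspective-transform example: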

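```cuda
#include <cuda_runtime.h>

// One thread per pixel (i = column, j = row)
__global__ void perspective_transform(float *out, const float *in, int width, int height,
                                      float fx, float fy, float cx, float cy)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    int j = blockIdx.y * blockDim.y + threadIdx.y;
    if (i >= width || j >= height) return;  // threads past the image edge do nothing

    int idx = j * width + i;
    // Coordinates relative to the principal point (cx, cy), normalized by the focal lengths
    float x = (i - cx) / fx;
    float y = (j - cy) / fy;
    out[idx] = in[idx] / (1.0f + x * x + y * y);
}

int main()
{
    // Illustrative parameters; a real program would load an image and its camera intrinsics
    const int width = 640, height = 480;
    const float fx = 500.0f, fy = 500.0f, cx = width / 2.0f, cy = height / 2.0f;
    const size_t bytes = width * height * sizeof(float);

    float *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemset(d_in, 0, bytes);  // placeholder: copy the input image here with cudaMemcpy

    dim3 blockSize(16, 16);
    dim3 gridSize((width + blockSize.x - 1) / blockSize.x, (height + blockSize.y - 1) / blockSize.y);
    perspective_transform<<<gridSize, blockSize>>>(d_out, d_in, width, height, fx, fy, cx, cy);
    cudaDeviceSynchronize();

    cudaFree(d_in);
    cudaFree(d_out);
    return 0;
}
```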
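The grid size in `main` is a ceiling division. This ensures the blocks cover the whole image even when its dimensions are not multiples of 16. Here is a worked example with an assumed 1920 × 1080 image:

$$
\text{gridSize} = \left( \left\lfloor \frac{1920 + 16 - 1}{16} \right\rfloor, \left\lfloor \frac{1080 + 16 - 1}{16} \right\rfloor \right) = \left( \left\lfloor \frac{1935}{16} \right\rfloor, \left\lfloor \frac{1095}{16} \right\rfloor \right) = (120, 68)
$$

This launches 120 × 16 = 1920 threads per row, which matches the width exactly. In the vertical direction it launches 68 × 16 = 1088 thread rows for 1080 image rows, so 8 rows of threads fall outside the image. The check `if (i >= width || j >= height) return;` keeps those threads from reading or writing out of bounds.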

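In this example, `perspective_transform` is a CUDA kernel that receives:

- the input and output image buffers;
- the image width and height;
- four parameters named like the intrinsics of a pinhole camera: focal lengths `fx`, `fy` and principal point `cx`, `cy`.

Each thread handles one pixel. It computes the pixel's offset `(x, y)` from the principal point, in units of focal length, and divides the pixel value by `1 + x*x + y*y`. Pixels are therefore attenuated more the farther they lie from the center. The kernel never moves a pixel to a new position, so strictly speaking it applies a radial brightness falloff rather than a geometric perspective warp. A true perspective transform remaps pixel coordinates through a 3×3 homography.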

Note that this is only a simple example; a real implementation will differ depending on the application.
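For comparison, here is a hedged sketch of a geometric perspective warp. This is a swapped-in technique, not the original author's code. It assumes the following:

- The 3×3 inverse homography `Hinv` has already been computed on the host, for example from four point correspondences.
- `Hinv` is stored row-major and maps an output pixel (u, v, 1) to input-image coordinates.
- Sampling is nearest-neighbour.

`Homography`, `warp_perspective` and `warp` are hypothetical names.

```cuda
// Sketch: perspective warp via an inverse homography, nearest-neighbour sampling.
#include <cuda_runtime.h>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical container for a 3x3 row-major inverse homography (passed by value to the kernel)
struct Homography { float h[9]; };

// One thread per OUTPUT pixel (u, v): map it back into the input image and sample there
__global__ void warp_perspective(float *out, const float *in, int width, int height, Homography Hinv)
{
    int u = blockIdx.x * blockDim.x + threadIdx.x;
    int v = blockIdx.y * blockDim.y + threadIdx.y;
    if (u >= width || v >= height) return;

    const float *h = Hinv.h;
    float w = h[6] * u + h[7] * v + h[8];               // homogeneous coordinate
    float value = 0.0f;                                  // pixels mapped outside the input stay 0
    if (fabsf(w) > 1e-8f) {
        float xs = (h[0] * u + h[1] * v + h[2]) / w;    // source x
        float ys = (h[3] * u + h[4] * v + h[5]) / w;    // source y
        int xi = __float2int_rn(xs);                     // round to the nearest pixel
        int yi = __float2int_rn(ys);
        if (xi >= 0 && xi < width && yi >= 0 && yi < height)
            value = in[yi * width + xi];
    }
    out[v * width + u] = value;
}

// Hypothetical host helper: warps a width x height single-channel image
std::vector<float> warp(const std::vector<float> &img, int width, int height, Homography Hinv)
{
    size_t bytes = img.size() * sizeof(float);
    float *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemcpy(d_in, img.data(), bytes, cudaMemcpyHostToDevice);

    dim3 block(16, 16);
    dim3 grid((width + block.x - 1) / block.x, (height + block.y - 1) / block.y);
    warp_perspective<<<grid, block>>>(d_out, d_in, width, height, Hinv);

    std::vector<float> result(img.size());
    cudaMemcpy(result.data(), d_out, bytes, cudaMemcpyDeviceToHost);  // waits for the kernel
    cudaFree(d_in);
    cudaFree(d_out);
    return result;
}
```

Each output pixel is mapped backward through `Hinv` instead of pushing input pixels forward. As a result, every output pixel is written exactly once and the result has no holes. Bilinear interpolation would be a drop-in improvement over nearest-neighbour sampling.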